How To Use AI Chatbots Effectively

TL;DR:

AI chatbots like ChatGPT can be game changers for getting started with tasks from trip planning to essay drafting, but they often produce convincingly written content that includes errors, made-up facts, or fake citations. Real examples in Ireland (a planning inspector, law cases, student work, media hoaxes) show what can go wrong. To use AI wisely: use it for structure, insert your own knowledge, verify with credible sources, refine with AI editing, and always do a final human review.

Introduction

Over the last couple of years, conversational AI tools like ChatGPT have moved from being futuristic novelties to everyday companions. People use them to draft emails, write essays, debug code, plan trips, and even brainstorm business ideas. I’ve been experimenting with them for both personal and professional tasks, and I’ve learned a lot about how to use them effectively and, just as importantly, how not to.

Starting from a Blank Page

For me, the hardest part of any project has always been the blank page. Back in school and university, I’d often stare at an empty Word document, unsure how to start an essay or project. That paralysis hasn’t gone away in professional life either.

This is where AI chatbots shine: they give you something to react to. Whether it’s a draft outline, a few paragraphs of content, or a list of ideas, having text on the page makes it easier to get moving. Recently, I even used ChatGPT to help plan my honeymoon in Tuscany. It didn’t make the final decisions for me, but it provided itineraries, suggestions for towns to visit, and food tours to consider. Once I had a starting point, I could shape the plan into something personal.

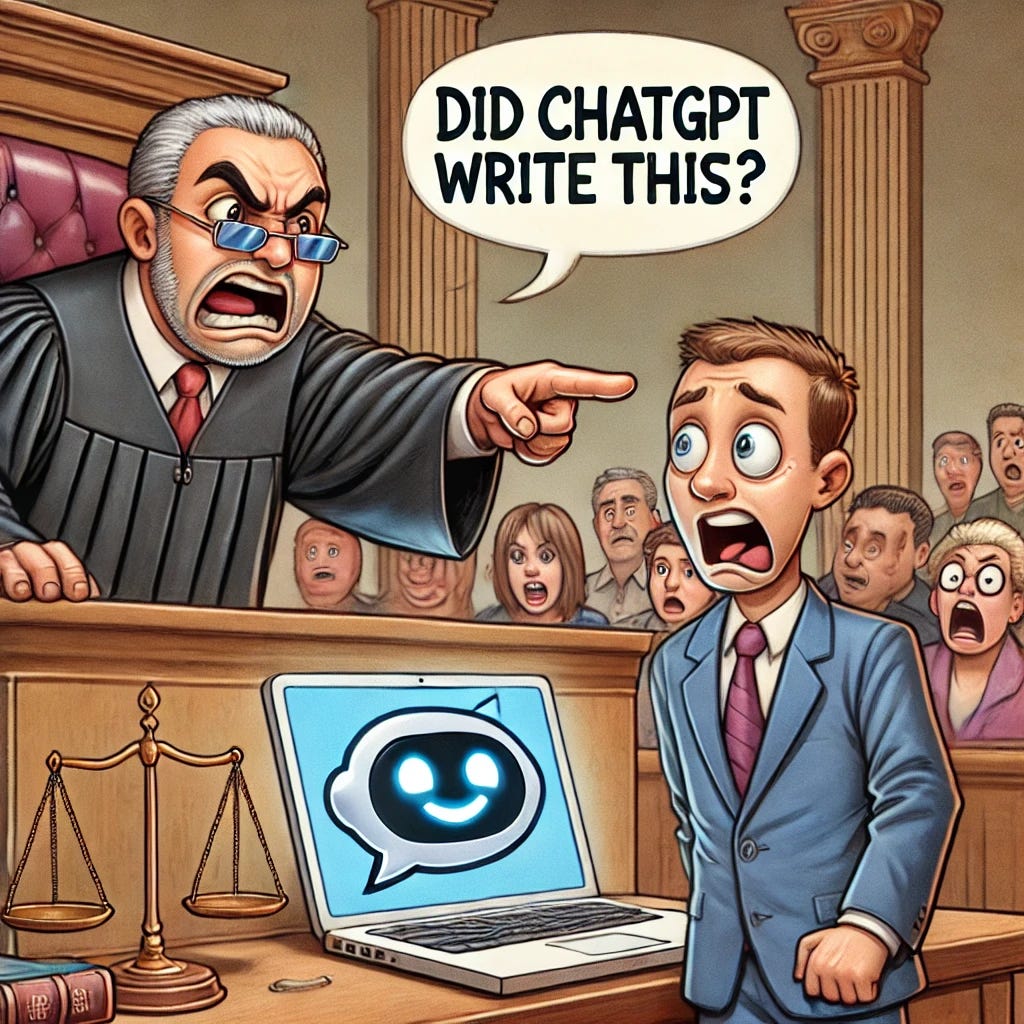

The Danger of Copy-Paste

That said, using AI uncritically can be risky. There are countless examples of professionals being embarrassed—or worse—because they copied and pasted AI-generated text without checking it.

In Ireland alone, we’ve seen several cases. A planning inspector with An Bord Pleanála had to be removed from a case after it emerged that they had used ChatGPT to draft a report on the Aughinish Alumina plant expansion. The report was scrapped, the board banned unapproved AI tools, and the issue is now part of a High Court challenge [1].

We have also seen judges caution litigants after spotting legal arguments that seemed to come straight from ChatGPT, complete with invented terminology. In Reddan v An Bord Pleanála, one litigant used a term in their submissions that the judge said was “not familiar in Irish jurisprudence” and sounded like it came from an AI source. The judge went on to warn that “the general public should be warned against the use of generative AI… in matters of law” [2].

Academia hasn’t been immune either. The University of Galway reported dozens of students caught submitting essays written (or heavily assisted) by AI, often with fake references [3]. Most of these cases were flagged because the submissions contained “telltale signs” of AI use, such as passages that looked polished but lacked depth, or references that, when checked, simply didn’t exist. Lecturers also noticed unusual patterns such as identical answers across multiple students, or bibliographies that included fake journal titles. Educators stress, however, that students aren’t being banned from AI completely; they need to understand its limits. AI can be a useful writing aid, but uncritical use risks academic penalties.

In the corporate and media world, The Irish Times had to issue a public apology after publishing an opinion piece titled “Irish women’s obsession with fake tan is problematic” that turned out to be a hoax: the author did not exist, and much of the article, along with its fake byline photo, was generated using AI [4]. The paper admitted it had “fallen victim to a deliberate and coordinated deception” and tightened editorial checks. Around the same time, broadcaster Dave Fanning filed a defamation case after his photo was wrongly used in coverage of a sexual misconduct trial involving another presenter, with his legal team suggesting an AI-powered content system may have been at fault [5].

All these incidents have one thing in common: people trusted AI content without properly checking it.

My Process for Using Chatbots Safely

Over time, I’ve developed a workflow for using tools like ChatGPT that balances speed with accuracy:

Use AI for structure and not substance: I let the model generate a first draft, an outline, or a brainstorming list.

Add my own creativity and expertise: I expand on the draft with examples I know, or insights from my own work and studies.

Verify with proper sources: I fact-check using textbooks, peer-reviewed papers, and trusted websites. If the chatbot suggests something questionable, I double-check it.

Leverage AI again for refinement: Once I’ve pulled everything together, I use AI tools for editing: checking grammar, spelling, and sometimes even spotting logical gaps.

Final human review: I always do a last check myself. If it doesn’t make sense to me, it’s not going out.

This process makes sure I get the productivity benefits of AI (speed, structure, and idea generation) without falling into the trap of over-reliance.

The Real Value of Chatbots

To me, the real power of tools like ChatGPT isn’t about replacing human thinking—it’s about unlocking it. They remove the fear of the blank page, help me get my thoughts moving, and give me a base to work from. But the responsibility for accuracy, nuance, and creativity still lies with me.

As these tools become more embedded in our work and personal lives, the people who succeed won’t be those who simply use them, but those who know how to use them wisely.

P.S. I have added a couple of examples of ChatGPT use to this post, one of them obvious, the other not so much ;) Please let me know if you spot them by commenting below.

References:

[1] The Journal – “An Bord Pleanála removes inspector for using ChatGPT in Aughinish Alumina case” (May 2025)

[2] Mason, Hayes & Curran – “Judge suspects man used AI in legal case against planned driving range near his home” (June 2025)

[3] Irish Independent – “University of Galway records 49 AI cheating cases” (October 2024)

[4] The Irish Times – “Editor apologises after AI-generated hoax op-ed published” (May 2023)

[5] Irish Examiner – “Dave Fanning sues over AI error linking him to sexual offence case” (Jan 2024)

Great article, Stephen! Will definitely use your advice. Thank you!